| Figure 5: A partial derivation tree that justifies shifting |

Menhir Reference Manual |

Menhir is a parser generator. It turns high-level grammar specifications, decorated with “semantic actions” (fragments of executable code), into parsers. It is based on Knuth’s LR(1) parser construction technique [16]. It is strongly inspired by its precursors: yacc [12], ML-Yacc [24], and ocamlyacc [19], but offers a large number of minor and major improvements that make it a more modern tool.

This brief reference manual explains how to use Menhir. It does not attempt to explain context-free grammars, parsing, or the LR technique. Readers who have never used a parser generator are encouraged to read about these ideas first [1,2,9]. They are also invited to have a look at the demos directory in Menhir’s distribution.

Menhir currently offers a choice between several “back-ends”:

Potential users of Menhir should be warned that Menhir’s feature set is not completely stable. There is a tension between preserving a measure of compatibility with ocamlyacc, on the one hand, and introducing new ideas, on the other hand. Some aspects of the tool are potentially subject to incompatible changes. For instance, the “traditional” error-handling mechanism (which is based on the error token, see §10) is now regarded as deprecated and is likely to be entirely removed in the future.

Menhir is invoked as follows:

menhir option …option filename …filename

Each of the file names must end with .mly (unless --coq is used, in which case it must end with .vy) and denotes a partial grammar specification. These partial grammar specifications are joined (§5.1) to form a single, self-contained grammar specification, which is then processed. The following optional command line switches allow controlling many aspects of the process.

--base basename. This switch controls the base name of the .ml and .mli files that are produced. That is, the tool will produce files named basename.ml and basename.mli. Note that basename can contain occurrences of the / character, so it really specifies a path and a base name. When only one filename is provided on the command line, the default basename is obtained by depriving filename of its final .mly suffix. When multiple file names are provided on the command line, no default base name exists, so that the --base switch must be used.

--cmly. This switch causes Menhir to produce a .cmly file in addition to its normal operation. This file contains a (binary-form) representation of the grammar and automaton (see §13.1).

--comment. This switch causes a few comments to be inserted into the OCaml code that is written to the .ml file.

--compare-errors filename1 --compare-errors filename2. Two such switches must always be used in conjunction so as to specify the names of two .messages files, filename1 and filename2. Each file is read and internally translated to a mapping of states to messages. Menhir then checks that the left-hand mapping is a subset of the right-hand mapping. This feature is typically used in conjunction with --list-errors to check that filename2 is complete (that is, covers all states where an error can occur). For more information, see §11.

--compile-errors filename. This switch causes Menhir to read the file filename, which must obey the .messages file format, and to compile it to an OCaml function that maps a state number to a message. The OCaml code is sent to the standard output channel. At the same time, Menhir checks that the collection of input sentences in the file filename is correct and irredundant. For more information, see §11.

--coq. This switch causes Menhir to produce Coq code. See §12.

--coq-lib-no-path. This switch indicates that references to the Coq library MenhirLib should not be qualified. This was the default behavior of Menhir prior to 2018/05/30. This switch is provided for compatibility, but normally should not be used.

--coq-lib-path path. This switch allows specifying under what name (or path) the Coq support library is known to Coq. When Menhir runs in --coq mode, the generated parser contains references to several modules in this library. This path is used to qualify these references. Its default value is MenhirLib.

--coq-no-actions. (Used in conjunction with --coq.) This switch

causes the semantic actions present in the .vy file to be ignored and

replaced with tt, the unique inhabitant of Coq’s unit type. This

feature can be used to test the Coq back-end with a standard grammar, that is, a

grammar that contains OCaml semantic actions. Just rename the file from

.mly to .vy and set this switch.

--coq-no-complete. (Used in conjunction with --coq.) This switch disables the generation of the proof of completeness of the parser (§12). This can be necessary because the proof of completeness is possible only if the grammar has no conflict (not even a benign one, in the sense of §6.1). This can be desirable also because, for a complex grammar, completeness may require a heavy certificate and its validation by Coq may take time.

--coq-no-version-check. (Used in conjunction with --coq.) This switch prevents the generation of the check that verifies that the versions of Menhir and MenhirLib match.

--depend. See §14.

--dump. This switch causes a description of the automaton to be written to the file basename.automaton. This description is written after benign conflicts have been resolved, before severe conflicts are resolved (§6), and before extra reductions are introduced (§4.1.8).

--dump-menhirLib directory. This command causes Menhir to write the source code of the runtime library MenhirLib in the directory directory, and exit. The directory directory must exist already. Two files, named menhirLib.ml and menhirLib.mli, are created in it. This command can be useful to users with special needs who wish to use MenhirLib but do not want to rely on it being installed somewhere in the file system.

--dump-resolved. This command line switch causes a description of the automaton to be written to the file basename.automaton.resolved. This description is written after all conflicts have been resolved (§6) and after extra reductions have been introduced (§4.1.8).

--echo-errors filename. This switch causes Menhir to read the .messages file filename and to produce on the standard output channel just the input sentences. (That is, all messages, blank lines, and comments are filtered out.) For more information, see §11.

--echo-errors-concrete filename. This switch causes Menhir to

read the .messages file filename and to produce on the standard output

channel just the input sentences. Each sentence is followed with a comment of

the form ## Concrete syntax: ... that shows this sentence in concrete

syntax. This comment is printed only if the user has defined an alias for

every token (§4.1.3).

--exn-carries-state. This switch causes the exception Error to carry an integer parameter, as follows: exception Error of int. When the parser detects a syntax error, the number of the current state is reported in this way. This allows the caller to select a suitable syntax error message, along the lines described in §11. This switch is currently supported by the code back-end only. Thus, it is incompatible with --table. It is also incompatible with --fixed-exception.

--explain. This switch causes conflict explanations to be written to the file basename.conflicts. See also §6.

--external-tokens T. This switch causes the definition of the token type to be omitted in basename.ml and basename.mli. Instead, the generated parser relies on the type T.token, where T is an OCaml module name. It is up to the user to define module T and to make sure that it exports a suitable token type. Module T can be hand-written. It can also be automatically generated out of a grammar specification using the --only-tokens switch.

--fixed-exception. This switch causes the exception Error to be internally defined as a synonym for Parsing.Parse_error. This means that an exception handler that catches Parsing.Parse_error will also catch the generated parser’s Error. This helps increase Menhir’s compatibility with ocamlyacc. There is otherwise no reason to use this switch.

--infer, --infer-write-query, --infer-read-reply. See §14.

--inspection. This switch requires --table. It causes Menhir to generate not only the monolithic and incremental APIs (§9.1, §9.2), but also the inspection API (§9.3). Activating this switch causes a few more tables to be produced, resulting in somewhat larger code size.

--interpret. This switch causes Menhir to act as an interpreter, rather than as a compiler. No OCaml code is generated. Instead, Menhir reads sentences off the standard input channel, parses them, and displays outcomes. This switch can be usefully combined with --trace. For more information, see §8.

--interpret-error. This switch is analogous to --interpret, except Menhir expects every sentence to cause an error on its last token, and displays information about the state in which the error is detected, in the .messages file format. For more information, see §11.

--interpret-show-cst. This switch, used in conjunction with --interpret, causes Menhir to display a concrete syntax tree when a sentence is successfully parsed. For more information, see §8.

--list-errors. This switch causes Menhir to produce (on the standard output channel) a complete list of input sentences that cause an error, in the .messages file format. For more information, see §11.

--log-automaton level. When level is nonzero, this switch causes some information about the automaton to be logged to the standard error channel.

--log-code level. When level is nonzero, this switch causes some information about the generated OCaml code to be logged to the standard error channel.

--log-grammar level. When level is nonzero, this switch causes some information about the grammar to be logged to the standard error channel. When level is 2, the nullable, FIRST, and FOLLOW tables are displayed.

--merge-errors filename1 --merge-errors filename2. Two such switches must always be used in conjunction so as to specify the names of two .messages files, filename1 and filename2. This command causes Menhir to merge these two .messages files and print the result on the standard output channel. For more information, see §11.

--no-dollars. This switch disallows the use of positional keywords of the form $i.

--no-inline. This switch causes all %inline keywords in the grammar specification to be ignored. This is especially useful in order to understand whether these keywords help solve any conflicts.

--no-stdlib. This switch instructs Menhir to not use its standard library (§5.4).

--ocamlc command. See §14.

--ocamldep command. See §14.

--only-preprocess. This switch causes the grammar specifications to be transformed up to the point where the automaton’s construction can begin. The grammar specifications whose names are provided on the command line are joined (§5.1); all parameterized nonterminal symbols are expanded away (§5.2); type inference is performed, if --infer is enabled; all nonterminal symbols marked %inline are expanded away (§5.3). This yields a single, monolithic grammar specification, which is printed on the standard output channel.

--only-tokens. This switch causes the %token declarations in the grammar specification to be translated into a definition of the token type, which is written to the files basename.ml and basename.mli. No code is generated. This is useful when a single set of tokens is to be shared between several parsers. The directory demos/calc-two contains a demo that illustrates the use of this switch.

--random-seed seed. This switch allows the user to set a random seed. This seed influences the random sentence generator.

--random-self-init. This switch asks Menhir to choose a random seed in a nondeterministic (system-dependent) way. This seed influences the random sentence generator.

--random-sentence-length length. This switch allows the user to set a goal length for the random sentence generator. The generated sentences will normally have length at most length.

--random-sentence symbol. This switch asks Menhir to produce and display a random sentence that is generated by the nonterminal symbol symbol. The sentence is displayed as a sequence of terminal symbols, separated with spaces. Each terminal symbol is represented by its name.

The generated sentence is valid with respect to the grammar. If the grammar is in the class LR(1) (that is, if it has no conflicts at all), then the generated sentence is also accepted by the automaton. However, if the grammar has conflicts, then it may be the case that the sentence is rejected by the automaton.

The distribution of sentences is not uniform; some sentences (or fragments of sentences) may be more likely to appear than others.

The productions that involve the error pseudo-token are ignored by the random sentence generator.

--random-sentence-concrete symbol. This switch asks Menhir to produce and display a random sentence that is generated by the nonterminal symbol symbol. The sentence is displayed as a sequence of terminal symbols, separated with spaces. Each terminal symbol is represented by its token alias (§4.1.3). This assumes that a token alias has been defined for every token.

--raw-depend. See §14.

--reference-graph. This switch causes a description of the grammar’s dependency graph to be written to the file basename.dot. The graph’s vertices are the grammar’s nonterminal symbols. There is a directed edge from vertex A to vertex B if the definition of A refers to B. The file is in a format that is suitable for processing by the graphviz toolkit.

--require-aliases. This switch causes Menhir to check that a token alias (§4.1.3) has been defined for every token. There is no requirement for this alias to be actually used; it must simply exist. A missing alias gives rise to a warning (and, in --strict mode, to an error).

--stdlib directory. This switch exists only for backwards compatibility and is ignored. It may be removed in the future.

--strategy strategy. This switch selects an error-handling strategy, to be used by the code back-end, the table back-end, and the reference interpreter. The available strategies are legacy and simplified. (However, at the time of writing, the code back-end does not yet support the simplified strategy.) When this switch is omitted, the legacy strategy is used. The choice of a strategy matters only if the grammar uses the error token. For more details, see §10.

--strict. This switch causes several warnings about the grammar and about the automaton to be considered errors. This includes warnings about useless precedence declarations, non-terminal symbols that produce the empty language, unreachable non-terminal symbols, productions that are never reduced, conflicts that are not resolved by precedence declarations, end-of-stream conflicts, and missing token aliases.

--suggest-*. See §14.

--table. This switch causes Menhir to use the table back-end, as opposed to the code back-end, which is the default. When --table is used, Menhir produces significantly more compact and somewhat slower parsers. See §16 for a speed comparison.

The table back-end produces rather compact tables, which are analogous to those produced by yacc, bison, or ocamlyacc. These tables are not quite stand-alone: they are exploited by an interpreter, which is shipped as part of the support library MenhirLib. For this reason, when --table is used, MenhirLib must be made visible to the OCaml compilers, and must be linked into your executable program. The --suggest-* switches, described above, help do this.

The code back-end compiles the LR automaton directly into a nest of mutually recursive OCaml functions. In that case, MenhirLib is not required.

The incremental API (§9.2) and the inspection API (§9.3) are made available only by the table back-end.

--timings. This switch causes internal timing information to be sent to the standard error channel.

--timings-to filename. This switch causes internal timing information to be written to the file filename.

--trace. This switch causes tracing code to be inserted into the generated parser, so that, when the parser is run, its actions are logged to the standard error channel. This is analogous to ocamlrun’s p=1 parameter, except this switch must be enabled at compile time: one cannot selectively enable or disable tracing at runtime.

--unparsing. This switch requires --table. It causes Menhir to generate the unparsing API (§9.4). When this switch is enabled, the generated parser must be linked with the libraries menhirLib and menhirCST.

--unused-precedence-levels. This switch suppresses all warnings about useless %left, %right, %nonassoc and %prec declarations.

--unused-token symbol. This switch suppresses the warning that is normally emitted when Menhir finds that the terminal symbol symbol is unused.

--unused-tokens. This switch suppresses all of the warnings that are normally emitted when Menhir finds that some terminal symbols are unused.

--update-errors filename. This switch causes Menhir to read the .messages file filename and to produce on the standard output channel a new .messages file that is identical, except the auto-generated comments have been re-generated. For more information, see §11.

--version. This switch causes Menhir to print its own version number and exit.

A semicolon character (;) may appear after a declaration (§4.1).

An old-style rule (§4.2) may be terminated with a semicolon. Also, within an old-style rule, each producer (§4.2.3) may be terminated with a semicolon.

A new-style rule (§4.3) must not be terminated with a semicolon. Within such a rule, the elements of a sequence must be separated with semicolons.

Semicolons are not allowed to appear anywhere except in the places mentioned above. This is in contrast with ocamlyacc, which views semicolons as insignificant, just like whitespace.

Identifiers (id) coincide with OCaml identifiers, except they are not allowed to contain the quote (’) character. Following OCaml, identifiers that begin with a lowercase letter (lid) or with an uppercase letter (uid) are distinguished.

A quoted identifier qid is a string enclosed in double quotes. Such a string cannot contain a double quote or a backslash. Quoted identifiers are used as token aliases (§4.1.3).

Comments are C-style (surrounded with /* and */, cannot be nested), C++-style (announced by // and extending until the end of the line), or OCaml-style (surrounded with (* and *), can be nested). Of course, inside OCaml code, only OCaml-style comments are allowed.

OCaml type expressions are surrounded with < and >. Within such expressions, all references to type constructors (other than the built-in list, option, etc.) must be fully qualified.

specification ::= declaration … declaration %% rule … rule [ %% OCaml code ] declaration ::= %{ OCaml code %} %parameter < uid : OCaml module type > %token [ < OCaml type > ] uid [ qid ] … uid [ qid ] %nonassoc uid … uid %left uid … uid %right uid … uid %type < OCaml type > lid … lid %start [ < OCaml type > ] lid … lid %attribute actual … actual attribute … attribute % attribute %on_error_reduce lid … lid attribute ::= [@ name payload ] old syntax — rule ::= [ %public ] [ %inline ] lid [ ( id, …, id ) ] : [ | ] group | … | group group ::= production | … | production { OCaml code } [ %prec id ] production ::= producer … producer [ %prec id ] producer ::= [ lid = ] actual actual ::= id [ ( actual, …, actual ) ] actual ( ? ∣ +∣ * )group | … | group new syntax — rule ::= [ %public ] let lid [ ( id, …, id ) ] ( := ∣ == ) expression expression ::= [ | ] expression | … | expression [ pattern = ] expression ; expression id [ ( expression , …, expression ) ] expression ( ? ∣ +∣ * ){ OCaml code } [ %prec id ] < OCaml id > [ %prec id ] pattern ::= lid ∣ _ ∣ ∣ ( pattern , …, pattern )

Figure 1: Syntax of grammar specifications

The syntax of grammar specifications appears in Figure 1. The places where attributes can be attached are not shown; they are documented separately (§13.2). A grammar specification begins with a sequence of declarations (§4.1), ended by a mandatory %% keyword. Following this keyword, a sequence of rules is expected. Each rule defines a nonterminal symbol lid, whose name must begin with a lowercase letter. A rule is expressed either in the “old syntax” (§4.2) or in the “new syntax” (§4.3), which is slightly more elegant and powerful.

A header is a piece of OCaml code, surrounded with %{ and %}. It is copied verbatim at the beginning of the .ml file. It typically contains OCaml open directives and function definitions for use by the semantic actions. If a single grammar specification file contains multiple headers, their order is preserved. However, when two headers originate in distinct grammar specification files, the order in which they are copied to the .ml file is unspecified.

It is important to note that the header is copied by Menhir only to the .ml file, not to the .mli file. Therefore, it should not contain declarations that affect the meaning of the types that appear in the .mli file. Here are two problems that people commonly run into:

open Foo in

the header and declaring %type<t> bar, where the type t is

defined in the module Foo, will not work.

Solution: Write %type<Foo.t> bar.

module F = Foo

in the header can create a problem,

because it allows OCaml to infer that the symbol bar has type

F.t. Menhir relies on this information without realizing that

F is a local name, so in the end, the .mli file contains a reference

to F.t that does not make sense. The problem persists even if one

declares %type<Foo.t> bar, because Menhir prefers the type inferred

by OCaml over the type declared by the user.

Solution:

Wrap the module alias in an anonymous structure, like so:

open struct module F = Foo end.

(This requires OCaml 4.08 or newer.)

This makes the name F available in your code,

but forces OCaml to prefer the name Foo in the types that it infers.

A declaration of the form:

%parameter < uid : OCaml module type >

causes the entire parser to become parameterized over the OCaml module uid, that is, to become an OCaml functor. The directory demos/calc-param contains a demo that illustrates the use of this switch.

If a single specification file contains multiple %parameter declarations, their order is preserved, so that the module name uid introduced by one declaration is effectively in scope in the declarations that follow. When two %parameter declarations originate in distinct grammar specification files, the order in which they are processed is unspecified. Last, %parameter declarations take effect before %{ … %}, %token, %type, or %start declarations are considered, so that the module name uid introduced by a %parameter declaration is effectively in scope in all %{ … %}, %token, %type, or %start declarations, regardless of whether they precede or follow the %parameter declaration. This means, in particular, that the side effects of an OCaml header are observed only when the functor is applied, not when it is defined.

A declaration of the form:

%token [ < OCaml type > ] uid1 [ qid1 ] … uidn [ qidn ]

defines the identifiers uid1, …, uidn as tokens, that is, as terminal symbols in the grammar specification and as data constructors in the token type.

If an OCaml type t is present, then these tokens are considered to carry a semantic value of type t, otherwise they are considered to carry no semantic value.

If a quoted identifier qidi is present, then it is considered an alias for the terminal symbol uidi. (This feature, known as “token aliases”, is borrowed from Bison.) Throughout the grammar, the quoted identifier qidi is then synonymous with the identifier uidi. For example, if one declares:

%token PLUS "+"

then the quoted identifier "+" stands for the terminal symbol PLUS throughout the grammar. An example of the use of token aliases appears in the directory demos/calc-alias. Token aliases can be used to improve the readability of a grammar. One must keep in mind, however, that they are just syntactic sugar: they are not interpreted in any way by Menhir or conveyed to tools like ocamllex. They could be considered confusing by a reader who mistakenly believes that they are interpreted as string literals.

A declaration of one of the following forms:

%nonassoc uid1 … uidn

%left uid1 … uidn

%right uid1 … uidn

assigns both a priority level and an associativity status to the symbols uid1, …, uidn. The priority level assigned to uid1, …, uidn is not defined explicitly: instead, it is defined to be higher than the priority level assigned by the previous %nonassoc, %left, or %right declaration, and lower than that assigned by the next %nonassoc, %left, or %right declaration. The symbols uid1, …, uidn can be tokens (defined elsewhere by a %token declaration) or dummies (not defined anywhere). Both can be referred to as part of %prec annotations. Associativity status and priority levels allow shift/reduce conflicts to be silently resolved (§6).

A declaration of the form:

%type < OCaml type > lid1 … lidn

assigns an OCaml type to each of the nonterminal symbols lid1, …, lidn. For start symbols, providing an OCaml type is mandatory, but is usually done as part of the %start declaration. For other symbols, it is optional. Providing type information can improve the quality of OCaml’s type error messages.

A %type declaration may concern not only a nonterminal symbol, such as, say, expression, but also a fully applied parameterized nonterminal symbol, such as list(expression) or separated_list(COMMA, option(expression)).

The types provided as part of %type declarations are copied verbatim to the .ml and .mli files. In contrast, headers (§4.1.1) are copied to the .ml file only. For this reason, the types provided as part of %type declarations must make sense both in the presence and in the absence of these headers. They should typically be fully qualified types.

A declaration of the form:

%start [ < OCaml type > ] lid1 … lidn

declares the nonterminal symbols lid1, …, lidn to be start symbols. Each such symbol must be assigned an OCaml type either as part of the %start declaration or via separate %type declarations. Each of lid1, …, lidn becomes the name of a function whose signature is published in the .mli file and that can be used to invoke the parser.

Attribute declarations of the form %attribute actual … actual attribute … attribute and % attribute are explained in §13.2.

A declaration of the form:

%on_error_reduce lid1 … lidn

marks the nonterminal symbols lid1, …, lidn as potentially eligible for reduction when an invalid token is found. This may cause one or more extra reduction steps to be performed before the error is detected.

More precisely, this declaration affects the automaton as follows. Let us say that a production lid → … is “reducible on error” if its left-hand symbol lid appears in a %on_error_reduce declaration. After the automaton has been constructed and after any conflicts have been resolved, in every state s, the following algorithm is applied:

If step 3 above is executed in state s, then an error can never be detected in state s, since all error actions in state s are replaced with reduce actions. Error detection is deferred: at least one reduction takes place before the error is detected. It is a “spurious” reduction: in a canonical LR(1) automaton, it would not take place.

An %on_error_reduce declaration does not affect the language that is accepted by the automaton. It does not affect the location where an error is detected. It is used to control in which state an error is detected. If used wisely, it can make errors easier to report, because they are detected in a state for which it is easier to write an accurate diagnostic message (§11.3).

Like a %type declaration, an %on_error_reduce declaration may concern not only a nonterminal symbol, such as, say, expression, but also a fully applied parameterized nonterminal symbol, such as list(expression) or separated_list(COMMA, option(expression)).

The “on-error-reduce-priority” of a production is that of its left-hand symbol. The “on-error-reduce-priority” of a nonterminal symbol is determined implicitly by the order of %on_error_reduce declarations. In the declaration %on_error_reduce lid1 … lidn, the symbols lid1, …, lidn have the same “on-error-reduce-priority”. They have higher “on-error-reduce-priority” than the symbols listed in previous %on_error_reduce declarations, and lower “on-error-reduce-priority” than those listed in later %on_error_reduce declarations.

In its simplest form, a rule begins with the nonterminal symbol lid, followed by a colon character (:), and continues with a sequence of production groups (§4.2.1). Each production group is preceded with a vertical bar character (|); the very first bar is optional. The meaning of the bar is choice: the nonterminal symbol id develops to either of the production groups. We defer explanations of the keyword %public (§5.1), of the keyword %inline (§5.3), and of the optional formal parameters ( id, …, id ) (§5.2).

In its simplest form, a production group consists of a single production (§4.2.2), followed by an OCaml semantic action (§4.2.1) and an optional %prec annotation (§4.2.1). A production specifies a sequence of terminal and nonterminal symbols that should be recognized, and optionally binds identifiers to their semantic values.

A semantic action is a piece of OCaml code that is executed in order to assign a semantic value to the nonterminal symbol with which this production group is associated. A semantic action can refer to the (already computed) semantic values of the terminal or nonterminal symbols that appear in the production via the semantic value identifiers bound by the production.

For compatibility with ocamlyacc, semantic actions can also refer to unnamed semantic values via positional keywords of the form $1, $2, etc. This style is discouraged. (It is in fact forbidden if --no-dollars is turned on.) Furthermore, as a positional keyword of the form $i is internally rewritten as _i, the user should not use identifiers of the form _i.

An annotation of the form %prec id indicates that the precedence level of the production group is the level assigned to the symbol id via a previous %nonassoc, %left, or %right declaration (§4.1.4). In the absence of a %prec annotation, the precedence level assigned to each production is the level assigned to the rightmost terminal symbol that appears in it. It is undefined if the rightmost terminal symbol has an undefined precedence level or if the production mentions no terminal symbols at all. The precedence level assigned to a production is used when resolving shift/reduce conflicts (§6).

If multiple productions are present in a single group, then the semantic action and precedence annotation are shared between them. This short-hand effectively allows several productions to share a semantic action and precedence annotation without requiring textual duplication. It is legal only when every production binds exactly the same set of semantic value identifiers and when no positional semantic value keywords ($1, etc.) are used.

A production is a sequence of producers (§4.2.3), optionally followed by a %prec annotation (§4.2.1). If a precedence annotation is present, it applies to this production alone, not to other productions in the production group. It is illegal for a production and its production group to both carry %prec annotations.

A producer is an actual (§4.2.4), optionally preceded with a binding of a semantic value identifier, of the form lid =. The actual specifies which construction should be recognized and how a semantic value should be computed for that construction. The identifier lid, if present, becomes bound to that semantic value in the semantic action that follows. Otherwise, the semantic value can be referred to via a positional keyword ($1, etc.).

In its simplest form, an actual is just a terminal or nonterminal symbol id. If it is a parameterized non-terminal symbol (see §5.2), then it should be applied: id( actual, …, actual ) .

An actual may be followed with a modifier (?,

, or *). This is explained further on (see §5.2 and Figure 2).

An actual may also be an “anonymous rule”. In that case, one writes just the rule’s right-hand side, which takes the form group | … | group. (This form is allowed only as an argument in an application.) This form is expanded on the fly to a definition of a fresh non-terminal symbol, which is declared %inline. For instance, providing an anonymous rule as an argument to list:

list ( e = expression; SEMICOLON { e } )

is equivalent to writing this:

list ( expression_SEMICOLON )

where the non-terminal symbol expression_SEMICOLON is chosen fresh and is defined as follows:

%inline expression_SEMICOLON: | e = expression; SEMICOLON { e }

Please be warned that the new syntax is considered experimental and is subject to change in the future.

In its simplest form, a rule takes the form let lid := expression. Its left-hand side lid is a nonterminal symbol; its right-hand side is an expression. Such a rule defines an ordinary nonterminal symbol, while the alternate form let lid == expression defines an %inline nonterminal symbol (§5.3), that is, a macro. A rule can be preceded with the keyword %public (§5.1) and can be parameterized with a tuple of formal parameters ( id, …, id ) (§5.2). The various forms of expressions, listed in Figure 1, are:

The syntax of expressions, as presented in Figure 1, seems more permissive than it really is. In reality, a choice cannot be nested inside a sequence; a sequence cannot be nested in the left-hand side of a sequence; a semantic action cannot appear in the left-hand side of a sequence. (Thus, there is a stratification in three levels: choice expressions, sequence expressions, and atomic expressions, which corresponds roughly to the stratification of rules, productions, and producers in the old syntax.) Furthermore, an expression between parentheses ( expression ) is not a valid expression. To surround an expression with parentheses, one must write either midrule ( expression ) or endrule ( expression ) ; see §5.4 and Figure 3.

When a complex expression (e.g., a choice or a sequence) is placed in parentheses, as in id ( expression ), this is equivalent to using id ( s ) , where the fresh symbol s is declared as a synonym for this expression, via the declaration let s == expression. This idiom is also known as an anonymous rule (§4.2.4).

As an example of a rule in the new syntax, the parameterized nonterminal symbol option, which is part of Menhir’s standard library (§5.4), can be defined as follows:

let option(x) := | x = x ; { None } | x = x ; { Some x }

Using a pun, it can also be written as follows:

let option(x) := | = x ; { None }| = x ; { Some x }

Using a pun and a point-free semantic action, it can also be expressed as follows:

let option(x) := | = x ; { None }| = x ; < Some >

As another example, the parameterized symbol delimited, also part of Menhir’s standard library (§5.4), can be defined in the new syntax as follows:

let delimited(opening, x, closing) == opening ; = x ; closing ; <>

The use of == indicates that this is a macro, i.e., an %inline nonterminal symbol (see §5.3). The identity semantic action <> is here synonymous with { x }.

Other illustrations of the new syntax can be found in the directories demos/calc-new-syntax and demos/calc-ast.

Grammar specifications can be split over multiple files. When Menhir is invoked with multiple argument file names, it considers each of these files as a partial grammar specification, and joins these partial specifications in order to obtain a single, complete specification.

This feature is intended to promote a form a modularity. It is hoped that, by splitting large grammar specifications into several “modules”, they can be made more manageable. It is also hoped that this mechanism, in conjunction with parameterization (§5.2), will promote sharing and reuse. It should be noted, however, that this is only a weak form of modularity. Indeed, partial specifications cannot be independently processed (say, checked for conflicts). It is necessary to first join them, so as to form a complete grammar specification, before any kind of grammar analysis can be done.

This mechanism is, in fact, how Menhir’s standard library (§5.4) is made available: even though its name does not appear on the command line, it is automatically joined with the user’s explicitly-provided grammar specifications, making the standard library’s definitions globally visible.

A partial grammar specification, or module, contains declarations and rules, just like a complete one: there is no visible difference. Of course, it can consist of only declarations, or only rules, if the user so chooses. (Don’t forget the mandatory %% keyword that separates declarations and rules. It must be present, even if one of the two sections is empty.)

It should be noted that joining is not a purely textual process. If two modules happen to define a nonterminal symbol by the same name, then it is considered, by default, that this is an accidental name clash. In that case, each of the two nonterminal symbols is silently renamed so as to avoid the clash. In other words, by default, a nonterminal symbol defined in module A is considered private, and cannot be defined again, or referred to, in module B.

Naturally, it is sometimes desirable to define a nonterminal symbol N in module A and to refer to it in module B. This is permitted if N is public, that is, if either its definition carries the keyword %public or N is declared to be a start symbol. A public nonterminal symbol is never renamed, so it can be referred to by modules other than its defining module.

In fact, it is permitted to split the definition of a public nonterminal symbol, over multiple modules and/or within a single module. That is, a public nonterminal symbol N can have multiple definitions, within one module and/or in distinct modules. All of these definitions are joined using the choice (|) operator. For instance, in the grammar of a programming language, the definition of the nonterminal symbol expression could be split into multiple modules, where one module groups the expression forms that have to do with arithmetic, one module groups those that concern function definitions and function calls, one module groups those that concern object definitions and method calls, and so on.

Another use of modularity consists in placing all %token declarations in one module, and the actual grammar specification in another module. The module that contains the token definitions can then be shared, making it easier to define multiple parsers that accept the same type of tokens. (On this topic, see demos/calc-two.)

A rule (that is, the definition of a nonterminal symbol) can be parameterized over an arbitrary number of symbols, which are referred to as formal parameters.

For instance, here is the definition of the parameterized nonterminal symbol option, taken from the standard library (§5.4):

%public option(X): | { None } | x = X { Some x }

This definition states that option(X) expands to either the empty string, producing the semantic value None, or to the string X, producing the semantic value Some x, where x is the semantic value of X. In this definition, the symbol X is abstract: it stands for an arbitrary terminal or nonterminal symbol. The definition is made public, so option can be referred to within client modules.

A client who wishes to use option simply refers to it, together with an actual parameter – a symbol that is intended to replace X. For instance, here is how one might define a sequence of declarations, preceded with optional commas:

declarations: | { [] } | ds = declarations; option(COMMA); d = declaration { d :: ds }

This definition states that declarations expands either to the empty string or to declarations followed by an optional comma followed by declaration. (Here, COMMA is presumably a terminal symbol.) When this rule is encountered, the definition of option is instantiated: that is, a copy of the definition, where COMMA replaces X, is produced. Things behave exactly as if one had written:

optional_comma: | { None } | x = COMMA { Some x } declarations: | { [] } | ds = declarations; optional_comma; d = declaration { d :: ds }

Note that, even though COMMA presumably has been declared as a token with no semantic value, writing x = COMMA is legal, and binds x to the unit value. This design choice ensures that the definition of option makes sense regardless of the nature of X: that is, X can be instantiated with a terminal symbol, with or without a semantic value, or with a nonterminal symbol.

In general, the definition of a nonterminal symbol N can be parameterized with an arbitrary number of formal parameters. When N is referred to within a production, it must be applied to the same number of actuals. In general, an actual is:

For instance, here is a rule whose single production consists of a single producer, which contains several, nested actuals. (This example is discussed again in §5.4.)

plist(X): | xs = loption(delimited(LPAREN, separated_nonempty_list(COMMA, X), RPAREN)) { xs }

actual? is syntactic sugar for option(actual) actual +is syntactic sugar for nonempty_list(actual) actual* is syntactic sugar for list(actual)

Figure 2: Syntactic sugar for simulating regular expressions, also known as EBNF

Applications of the parameterized nonterminal symbols option, nonempty_list, and list, which are defined in the standard library (§5.4), can be written using a familiar, regular-expression like syntax (Figure 2).

A formal parameter can itself expect parameters. For instance, here is a rule that defines the syntax of procedures in an imaginary programming language:

procedure(list): | PROCEDURE ID list(formal) SEMICOLON block SEMICOLON { … }

This rule states that the token ID, which represents the name of the procedure, should be followed with a list of formal parameters. (The definitions of the nonterminal symbols formal and block are not shown.) However, because list is a formal parameter, as opposed to a concrete nonterminal symbol defined elsewhere, this definition does not specify how the list is laid out: which token, if any, is used to separate, or terminate, list elements? is the list allowed to be empty? and so on. A more concrete notion of procedure is obtained by instantiating the formal parameter list: for instance, procedure(plist), where plist is the parameterized nonterminal symbol defined earlier, is a valid application.

Definitions and uses of parameterized nonterminal symbols are checked for consistency before they are expanded away. In short, it is checked that, wherever a nonterminal symbol is used, it is supplied with actual arguments in appropriate number and of appropriate nature. This guarantees that expansion of parameterized definitions terminates and produces a well-formed grammar as its outcome.

It is well-known that the following grammar of arithmetic expressions does not work as expected: that is, in spite of the priority declarations, it has shift/reduce conflicts.

%token < int > INT %token PLUS TIMES %left PLUS %left TIMES %% expression: | i = INT { i } | e = expression; o = op; f = expression { o e f } op: | PLUS { ( + ) } | TIMES { ( * ) }

The trouble is, the precedence level of the production expression → expression op expression is undefined, and there is no sensible way of defining it via a %prec declaration, since the desired level really depends upon the symbol that was recognized by op: was it PLUS or TIMES?

The standard workaround is to abandon the definition of op as a separate nonterminal symbol, and to inline its definition into the definition of expression, like this:

expression: | i = INT { i } | e = expression; PLUS; f = expression { e + f } | e = expression; TIMES; f = expression { e * f }

This avoids the shift/reduce conflict, but gives up some of the original specification’s structure, which, in realistic situations, can be damageable. Fortunately, Menhir offers a way of avoiding the conflict without manually transforming the grammar, by declaring that the nonterminal symbol op should be inlined:

expression: | i = INT { i } | e = expression; o = op; f = expression { o e f } %inline op: | PLUS { ( + ) } | TIMES { ( * ) }

The %inline keyword causes all references to op to be replaced with its definition. In this example, the definition of op involves two productions, one that develops to PLUS and one that expands to TIMES, so every production that refers to op is effectively turned into two productions, one that refers to PLUS and one that refers to TIMES. After inlining, op disappears and expression has three productions: that is, the result of inlining is exactly the manual workaround shown above.

In some situations, inlining can also help recover a slight efficiency margin. For instance, the definition:

%inline plist(X): | xs = loption(delimited(LPAREN, separated_nonempty_list(COMMA, X), RPAREN)) { xs }

effectively makes plist(X) an alias for the right-hand side loption(…). Without the %inline keyword, the language recognized by the grammar would be the same, but the LR automaton would probably have one more state and would perform one more reduction at run time.

The %inline keyword does not affect the computation of positions (§7). The same positions are computed, regardless of where %inline keywords are placed.

If the semantic actions have side effects, the %inline keyword can affect the order in which these side effects take place. In the example of op and expression above, if for some reason the semantic action associated with op has a side effect (such as updating a global variable, or printing a message), then, by inlining op, we delay this side effect, which takes place after the second operand has been recognized, whereas in the absence of inlining it takes place as soon as the operator has been recognized.

Name Recognizes Produces Comment endrule(X) X α, if X : α (inlined) midrule(X) X α, if X : α option(X) є | X α option, if X : α (also X?) ioption(X) є | X α option, if X : α (inlined) boption(X) є | X bool loption(X) є | X α list, if X : α list pair(X, Y) X Y α×β, if X : α and Y : β separated_pair(X, sep, Y) X sep Y α×β, if X : α and Y : β preceded(opening, X) opening X α, if X : α terminated(X, closing) X closing α, if X : α delimited(opening, X, closing) opening X closing α, if X : α list(X) a possibly empty sequence of X’s α list, if X : α (also X*) nonempty_list(X) a nonempty sequence of X’s α list, if X : α (also X +)separated_list(sep, X) a possibly empty sequence of X’s separated with sep’s α list, if X : α separated_nonempty_list(sep, X) a nonempty sequence of X’s separated with sep’s α list, if X : α rev(X) X α list, if X : α list (inlined) flatten(X) X α list, if X : α list list (inlined) append(X, Y) X Y α list, if X, Y : α list (inlined)

Figure 3: Summary of the standard library; see standard.mly for details

Once equipped with a rudimentary module system (§5.1), parameterization (§5.2), and inlining (§5.3), it is straightforward to propose a collection of commonly used definitions, such as options, sequences, lists, and so on. This standard library is joined, by default, with every grammar specification. A summary of the nonterminal symbols offered by the standard library appears in Figure 3. See also the short-hands documented in Figure 2.

By relying on the standard library, a client module can concisely define more elaborate notions. For instance, the following rule:

%inline plist(X): | xs = loption(delimited(LPAREN, separated_nonempty_list(COMMA, X), RPAREN)) { xs }

causes plist(X) to recognize a list of X’s, where the empty list is represented by the empty string, and a non-empty list is delimited with parentheses and comma-separated.

The standard library is stored in a file named standard.mly, which is embedded inside Menhir when it is built. The command line switch --no-stdlib instructs Menhir to not load the standard library.

The meaning of the symbols defined in the standard library (Figure 3) should be clear in most cases. Yet, the symbols endrule(X) and midrule(X) deserve an explanation. Both take an argument X, which typically will be instantiated with an anonymous rule (§4.2.4). Both are defined as a synonym for X. In both cases, this allows placing an anonymous subrule in the middle of a rule.

For instance, the following is a well-formed production:

|

This production consists of three producers, namely cat and endrule(dog { OCaml code1 }) and cow, and a semantic action { OCaml code2 }. Because endrule(X) is declared as an %inline synonym for X, the expansion of anonymous rules (§4.2.4), followed with the expansion of %inline symbols (§5.3), transforms the above production into the following:

|

Note that OCaml code1 moves to the end of the rule, which means that this code is executed only after cat, dog and cow have been recognized. In this example, the use of endrule is rather pointless, as the expanded code is more concise and clearer than the original code. Still, endrule can be useful when its actual argument is an anonymous rule with multiple branches.

midrule is used in exactly the same way as endrule, but its expansion is different. For instance, the following is a well-formed production:

|

(There is no dog in this example; this is intentional.) Because midrule(X) is a synonym for X, but is not declared %inline, the expansion of anonymous rules (§4.2.4), followed with the expansion of %inline symbols (§5.3), transforms the above production into the following:

|

where the fresh nonterminal symbol xxx is separately defined by the rule xxx: { OCaml code1 } . Thus, xxx recognizes the empty string, and as soon as it is recognized, OCaml code1 is executed. This is known as a “mid-rule action”.

When a shift/reduce or reduce/reduce conflict is detected, it is classified as either benign, if it can be resolved by consulting user-supplied precedence declarations, or severe, if it cannot. Benign conflicts are not reported. Severe conflicts are reported and, if the --explain switch is on, explained.

A shift/reduce conflict involves a single token (the one that one might wish to shift) and one or more productions (those that one might wish to reduce). When such a conflict is detected, the precedence level (§4.1.4, §4.2.1) of these entities are looked up and compared as follows:

In either of these cases, the conflict is considered benign. Otherwise, it is considered severe. Note that a reduce/reduce conflict is always considered severe, unless it happens to be subsumed by a benign multi-way shift/reduce conflict (item 3 above).

When the --dump switch is on, a description of the automaton is written to the .automaton file. Severe conflicts are shown as part of this description. Fortunately, there is also a way of understanding conflicts in terms of the grammar, rather than in terms of the automaton. When the --explain switch is on, a textual explanation is written to the .conflicts file.

Not all conflicts are explained in this file: instead, only one conflict per automaton state is explained. This is done partly in the interest of brevity, but also because Pager’s algorithm can create artificial conflicts in a state that already contains a true LR(1) conflict; thus, one cannot hope in general to explain all of the conflicts that appear in the automaton. As a result of this policy, once all conflicts explained in the .conflicts file have been fixed, one might need to run Menhir again to produce yet more conflict explanations.

%token IF THEN ELSE %start < expression > expression %% expression: | … | IF b = expression THEN e = expression { … } | IF b = expression THEN e = expression ELSE f = expression { … } | …

Figure 4: Basic example of a shift/reduce conflict

Figure 4 shows a grammar specification with a typical shift/reduce conflict. When this specification is analyzed, the conflict is detected, and an explanation is written to the .conflicts file. The explanation first indicates in which state the conflict lies by showing how that state is reached. Here, it is reached after recognizing the following string of terminal and nonterminal symbols—the conflict string:

IF expression THEN IF expression THEN expression

Allowing the conflict string to contain both nonterminal and terminal symbols usually makes it shorter and more readable. If desired, a conflict string composed purely of terminal symbols could be obtained by replacing each occurrence of a nonterminal symbol N with an arbitrary N-sentence.

The conflict string can be thought of as a path that leads from one of the automaton’s start states to the conflict state. When multiple such paths exist, the one that is displayed is chosen shortest. Nevertheless, it may sometimes be quite long. In that case, artificially (and temporarily) declaring some existing nonterminal symbols to be start symbols has the effect of adding new start states to the automaton and can help produce shorter conflict strings. Here, expression was declared to be a start symbol, which is why the conflict string is quite short.

In addition to the conflict string, the .conflicts file also states that the conflict token is ELSE. That is, when the automaton has recognized the conflict string and when the lookahead token (the next token on the input stream) is ELSE, a conflict arises. A conflict corresponds to a choice: the automaton is faced with several possible actions, and does not know which one should be taken. This indicates that the grammar is not LR(1). The grammar may or may not be inherently ambiguous.

In our example, the conflict string and the conflict token are enough to understand why there is a conflict: when two IF constructs are nested, it is ambiguous which of the two constructs the ELSE branch should be associated with. Nevertheless, the .conflicts file provides further information: it explicitly shows that there exists a conflict, by proving that two distinct actions are possible. Here, one of these actions consists in shifting, while the other consists in reducing: this is a shift/reduce conflict.

A proof takes the form of a partial derivation tree whose fringe begins with the conflict string, followed by the conflict token. A derivation tree is a tree whose nodes are labeled with symbols. The root node carries a start symbol. A node that carries a terminal symbol is considered a leaf, and has no children. A node that carries a nonterminal symbol N either is considered a leaf, and has no children; or is not considered a leaf, and has n children, where n≥ 0, labeled x1,…,xn, where N → x1,…,xn is a production. The fringe of a partial derivation tree is the string of terminal and nonterminal symbols carried by the tree’s leaves. A string of terminal and nonterminal symbols that is the fringe of some partial derivation tree is a sentential form.

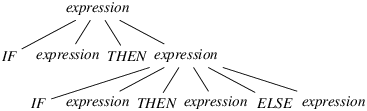

Figure 5: A partial derivation tree that justifies shifting

expression IF expression THEN expression IF expression THEN expression . ELSE expression

Figure 6: A textual version of the tree in Figure 5

In our example, the proof that shifting is possible is the derivation tree shown in Figures 5 and 6. At the root of the tree is the grammar’s start symbol, expression. This symbol develops into the string IF expression THEN expression, which forms the tree’s second level. The second occurrence of expression in that string develops into IF expression THEN expression ELSE expression, which forms the tree’s last level. The tree’s fringe, a sentential form, is the string IF expression THEN IF expression THEN expression ELSE expression. As announced earlier, it begins with the conflict string IF expression THEN IF expression THEN expression, followed with the conflict token ELSE.

In Figure 6, the end of the conflict string is materialized with a dot. Note that this dot does not occupy the rightmost position in the tree’s last level. In other words, the conflict token (ELSE) itself occurs on the tree’s last level. In practical terms, this means that, after the automaton has recognized the conflict string and peeked at the conflict token, it makes sense for it to shift that token.

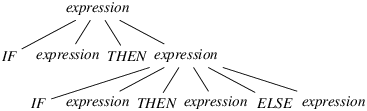

Figure 7: A partial derivation tree that justifies reducing

expression IF expression THEN expression ELSE expression // lookahead token appears IF expression THEN expression .

Figure 8: A textual version of the tree in Figure 7

In our example, the proof that reducing is possible is the derivation tree shown in Figures 7 and 8. Again, the sentential form found at the fringe of the tree begins with the conflict string, followed with the conflict token.

Again, in Figure 8, the end of the conflict string is materialized with a dot. Note that, this time, the dot occupies the rightmost position in the tree’s last level. In other words, the conflict token (ELSE) appeared on an earlier level (here, on the second level). This fact is emphasized by the comment // lookahead token appears found at the second level. In practical terms, this means that, after the automaton has recognized the conflict string and peeked at the conflict token, it makes sense for it to reduce the production that corresponds to the tree’s last level—here, the production is expression → IF expression THEN expression.

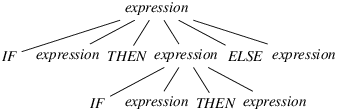

Figures 9 and 10 show a partial derivation tree that justifies reduction in a more complex situation. (This derivation tree is relative to a grammar that is not shown.) Here, the conflict string is DATA UIDENT EQUALS UIDENT; the conflict token is LIDENT. It is quite clear that the fringe of the tree begins with the conflict string. However, in this case, the fringe does not explicitly exhibit the conflict token. Let us examine the tree more closely and answer the question: following UIDENT, what’s the next terminal symbol on the fringe?

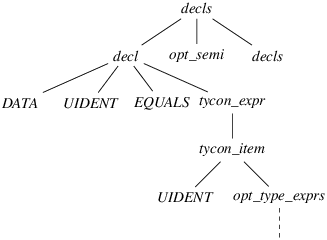

Figure 9: A partial derivation tree that justifies reducing

decls decl opt_semi decls // lookahead token appears because opt_semi can vanish and decls can begin with LIDENT DATA UIDENT EQUALS tycon_expr // lookahead token is inherited tycon_item // lookahead token is inherited UIDENT opt_type_exprs // lookahead token is inherited .

Figure 10: A textual version of the tree in Figure 9

First, note that opt_type_exprs is not a leaf node, even though it has no children. The grammar contains the production opt_type_exprs → є: the nonterminal symbol opt_type_exprs develops to the empty string. (This is made clear in Figure 10, where a single dot appears immediately below opt_type_exprs.) Thus, opt_type_exprs is not part of the fringe.

Next, note that opt_type_exprs is the rightmost symbol within its level. Thus, in order to find the next symbol on the fringe, we have to look up one level. This is the meaning of the comment // lookahead token is inherited. Similarly, tycon_item and tycon_expr appear rightmost within their level, so we again have to look further up.

This brings us back to the tree’s second level. There, decl is not the rightmost symbol: next to it, we find opt_semi and decls. Does this mean that opt_semi is the next symbol on the fringe? Yes and no. opt_semi is a nonterminal symbol, but we are really interested in finding out what the next terminal symbol on the fringe could be. The partial derivation tree shown in Figures 9 and 10 does not explicitly answer this question. In order to answer it, we need to know more about opt_semi and decls.

Here, opt_semi stands (as one might have guessed) for an optional semicolon, so the grammar contains a production opt_semi → є. This is indicated by the comment // opt_semi can vanish. (Nonterminal symbols that generate є are also said to be nullable.) Thus, one could choose to turn this partial derivation tree into a larger one by developing opt_semi into є, making it a non-leaf node. That would yield a new partial derivation tree where the next symbol on the fringe, following UIDENT, is decls.

Now, what about decls? Again, it is a nonterminal symbol, and we are really interested in finding out what the next terminal symbol on the fringe could be. Again, we need to imagine how this partial derivation tree could be turned into a larger one by developing decls. Here, the grammar happens to contain a production of the form decls → LIDENT … This is indicated by the comment // decls can begin with LIDENT. Thus, by developing decls, it is possible to construct a partial derivation tree where the next symbol on the fringe, following UIDENT, is LIDENT. This is precisely the conflict token.

To sum up, there exists a partial derivation tree whose fringe begins with the conflict string, followed with the conflict token. Furthermore, in that derivation tree, the dot occupies the rightmost position in the last level. As in our previous example, this means that, after the automaton has recognized the conflict string and peeked at the conflict token, it makes sense for it to reduce the production that corresponds to the tree’s last level—here, the production is opt_type_exprs → є.

Understanding conflicts requires comparing two (or more) derivation trees. It is frequent for these trees to exhibit a common factor, that is, to exhibit identical structure near the top of the tree, and to differ only below a specific node. Manual identification of that node can be tedious, so Menhir performs this work automatically. When explaining a n-way conflict, it first displays the greatest common factor of the n derivation trees. A question mark symbol (?) is used to identify the node where the trees begin to differ. Then, Menhir displays each of the n derivation trees, without their common factor – that is, it displays n sub-trees that actually begin to differ at the root. This should make visual comparisons significantly easier.

It is unspecified how severe conflicts are resolved. Menhir attempts to mimic ocamlyacc’s specification, that is, to resolve shift/reduce conflicts in favor of shifting, and to resolve reduce/reduce conflicts in favor of the production that textually appears earliest in the grammar specification. However, this specification is inconsistent in case of three-way conflicts, that is, conflicts that simultaneously involve a shift action and several reduction actions. Furthermore, textual precedence can be undefined when the grammar specification is split over multiple modules. In short, Menhir’s philosophy is that

so you should not care how they are resolved.

Menhir’s treatment of the end of the token stream is (believed to be) fully compatible with ocamlyacc’s. Yet, Menhir attempts to be more user-friendly by warning about a class of so-called “end-of-stream conflicts”.

In many textbooks on parsing, it is assumed that the lexical analyzer, which produces the token stream, produces a special token, written #, to signal that the end of the token stream has been reached. A parser generator can take advantage of this by transforming the grammar: for each start symbol S in the original grammar, a new start symbol S’ is defined, together with the production S′→ S# . The symbol S is no longer a start symbol in the new grammar. This means that the parser will accept a sentence derived from S only if it is immediately followed by the end of the token stream.

This approach has the advantage of simplicity. However, ocamlyacc and Menhir do not follow it, for several reasons. Perhaps the most convincing one is that it is not flexible enough: sometimes, it is desirable to recognize a sentence derived from S, without requiring that it be followed by the end of the token stream: this is the case, for instance, when reading commands, one by one, on the standard input channel. In that case, there is no end of stream: the token stream is conceptually infinite. Furthermore, after a command has been recognized, we do not wish to examine the next token, because doing so might cause the program to block, waiting for more input.

In short, ocamlyacc and Menhir’s approach is to recognize a sentence derived from S and to not look, if possible, at what follows. However, this is possible only if the definition of S is such that the end of an S-sentence is identifiable without knowledge of the lookahead token. When the definition of S does not satisfy this criterion, and end-of-stream conflict arises: after a potential S-sentence has been read, there can be a tension between consulting the next token, in order to determine whether the sentence is continued, and not consulting the next token, because the sentence might be over and whatever follows should not be read. Menhir warns about end-of-stream conflicts, whereas ocamlyacc does not.

Technically, Menhir proceeds as follows. A # symbol is introduced. It is, however, only a pseudo-token: it is never produced by the lexical analyzer. For each start symbol S in the original grammar, a new start symbol S’ is defined, together with the production S′→ S. The corresponding start state of the LR(1) automaton is composed of the LR(1) item S′ → . S [# ]. That is, the pseudo-token # initially appears in the lookahead set, indicating that we expect to be done after recognizing an S-sentence. During the construction of the LR(1) automaton, this lookahead set is inherited by other items, with the effect that, in the end, the automaton has:

A state of the automaton has a reduce action on # if, in that state, an S-sentence has been read, so that the job is potentially finished. A state has a shift or reduce action on a physical token if, in that state, more tokens potentially need to be read before an S-sentence is recognized. If a state has a reduce action on #, then that action should be taken without requesting the next token from the lexical analyzer. On the other hand, if a state has a shift or reduce action on a physical token, then the lookahead token must be consulted in order to determine if that action should be taken.

%token < int > INT %token PLUS TIMES %left PLUS %left TIMES %start < int > expr %% expr: | i = INT { i } | e1 = expr PLUS e2 = expr { e1 + e2 } | e1 = expr TIMES e2 = expr { e1 * e2 }

Figure 11: Basic example of an end-of-stream conflict

State 6: expr -> expr . PLUS expr [ # TIMES PLUS ] expr -> expr PLUS expr . [ # TIMES PLUS ] expr -> expr . TIMES expr [ # TIMES PLUS ] -- On TIMES shift to state 3 -- On # PLUS reduce production expr -> expr PLUS expr State 4: expr -> expr . PLUS expr [ # TIMES PLUS ] expr -> expr . TIMES expr [ # TIMES PLUS ] expr -> expr TIMES expr . [ # TIMES PLUS ] -- On # TIMES PLUS reduce production expr -> expr TIMES expr State 2: expr' -> expr . [ # ] expr -> expr . PLUS expr [ # TIMES PLUS ] expr -> expr . TIMES expr [ # TIMES PLUS ] -- On TIMES shift to state 3 -- On PLUS shift to state 5 -- On # accept expr

Figure 12: Part of an LR automaton for the grammar in Figure 11

… %token END %start < int > main // instead of expr %% main: | e = expr END { e } expr: | …

Figure 13: Fixing the grammar specification in Figure 11

An end-of-stream conflict arises when a state has distinct actions on # and on at least one physical token. In short, this means that the end of an S-sentence cannot be unambiguously identified without examining one extra token. Menhir’s default behavior, in that case, is to suppress the action on #, so that more input is always requested.

Figure 11 shows a grammar that has end-of-stream conflicts. When this grammar is processed, Menhir warns about these conflicts, and further warns that expr is never accepted. Let us explain.

Part of the corresponding automaton, as described in the .automaton file, is shown in Figure 12. Explanations at the end of the .automaton file (not shown) point out that states 6 and 2 have an end-of-stream conflict. Indeed, both states have distinct actions on # and on the physical token TIMES. It is interesting to note that, even though state 4 has actions on # and on physical tokens, it does not have an end-of-stream conflict. This is because the action taken in state 4 is always to reduce the production expr → expr TIMES expr, regardless of the lookahead token.

By default, Menhir produces a parser where end-of-stream conflicts are resolved in favor of looking ahead: that is, the problematic reduce actions on # are suppressed. This means, in particular, that the accept action in state 2, which corresponds to reducing the production expr → expr’, is suppressed. This explains why the symbol expr is never accepted: because expressions do not have an unambiguous end marker, the parser will always request one more token and will never stop.

In order to avoid this end-of-stream conflict, the standard solution is to introduce a new token, say END, and to use it as an end marker for expressions. The END token could be generated by the lexical analyzer when it encounters the actual end of stream, or it could correspond to a piece of concrete syntax, say, a line feed character, a semicolon, or an end keyword. The solution is shown in Figure 13.

When an ocamllex-generated lexical analyzer produces a token, it updates

two fields, named lex_start_p and lex_curr_p, in its environment

record, whose type is Lexing.lexbuf. Each of these fields holds a value

of type Lexing.position. Together, they represent the token’s start and

end positions within the text that is being scanned. These fields are read by

Menhir after calling the lexical analyzer, so it is the lexical

analyzer’s responsibility to correctly set these fields.

A position consists

mainly of an offset (the position’s pos_cnum field), but also holds

information about the current file name, the current line number, and the

current offset within the current line. (Not all ocamllex-generated analyzers

keep this extra information up to date. This must be explicitly programmed by

the author of the lexical analyzer.)

$startposstart position of the first symbol in the production’s right-hand side, if there is one; end position of the most recently parsed symbol, otherwise $endposend position of the last symbol in the production’s right-hand side, if there is one; end position of the most recently parsed symbol, otherwise $startpos($i | id)start position of the symbol named $i or id$endpos($i | id)end position of the symbol named $i or id$symbolstartpos start position of the leftmost symbol id such that $startpos(id)!=$endpos(id);if there is no such symbol, $endpos$startofs$endofs$startofs($i | id)same as above, but produce an integer offset instead of a position $endofs($i | id)$symbolstartofs$locstands for the pair ($startpos, $endpos)$loc(id)stands for the pair ($startpos(id), $endpos(id))$slocstands for the pair ($symbolstartpos, $endpos)

Figure 14: Position-related keywords

symbol_start_pos()$symbolstartpos symbol_end_pos()$endposrhs_start_pos i$startpos($i)(1 ≤ i ≤ n) rhs_end_pos i$endpos($i)(1 ≤ i ≤ n) symbol_start()$symbolstartofssymbol_end()$endofsrhs_start i$startofs($i)(1 ≤ i ≤ n) rhs_end i$endofs($i)(1 ≤ i ≤ n)

Figure 15: Translating position-related incantations from ocamlyacc to Menhir

This mechanism allows associating pairs of positions with terminal symbols. If desired, Menhir automatically extends it to nonterminal symbols as well. That is, it offers a mechanism for associating pairs of positions with terminal or nonterminal symbols. This is done by making a set of keywords available to semantic actions (Figure 14). These keywords are not available outside of a semantic action: in particular, they cannot be used within an OCaml header.

OCaml’s standard library module Parsing is deprecated. The functions that it offers can be called, but will return dummy positions.

We remark that, if the current production has an empty right-hand side, then

$startpos and $endpos are equal, and (by convention) are the end

position of the most recently parsed symbol (that is, the symbol that happens

to be on top of the automaton’s stack when this production is reduced). If

the current production has a nonempty right-hand side, then

$startpos is the same as $startpos($1) and

$endpos is the same as $endpos($n),

where n is the length of the right-hand side.

More generally, if the current production has matched a sentence of length

zero, then $startpos and $endpos will be equal, and conversely.

The position $startpos is sometimes “further towards the left” than

one would like. For example, in the following production:

declaration: modifier? variable { $startpos }

the keyword $startpos represents the start position of the optional

modifier modifier?. If this modifier turns out to be absent, then its

start position is (by definition) the end position of the most recently parsed

symbol. This may not be what is desired: perhaps the user would prefer in this

case to use the start position of the symbol variable. This is achieved by

using $symbolstartpos instead of $startpos. By definition,

$symbolstartpos is the start position of the leftmost symbol whose

start and end positions differ. In this example, the computation of

$symbolstartpos skips the absent modifier, whose start and end

positions coincide, and returns the start position of the symbol variable

(assuming this symbol has distinct start and end positions).

There is no keyword $symbolendpos. Indeed, the problem

with $startpos is due to the asymmetry in the definition

of $startpos and $endpos in the case of an empty right-hand

side, and does not affect $endpos.

The positions computed by Menhir are exactly the same as those computed by

ocamlyacc1. More precisely, Figure 15 sums up how

to translate a call to the Parsing module, as used in an ocamlyacc grammar, to a Menhir keyword.

We note that Menhir’s $startpos does not appear in the right-hand

column in Figure 15. In other words, Menhir’s $startpos

does not correspond exactly to any of the ocamlyacc function calls.

An exact ocamlyacc equivalent of $startpos is rhs_start_pos 1

if the current production has a nonempty right-hand side and

symbol_start_pos() if it has an empty right-hand side.

Finally, we remark that Menhir’s %inline keyword (§5.3) does not affect the computation of positions. The same positions are computed, regardless of where %inline keywords are placed.

When --interpret is set, Menhir no longer behaves as a compiler. Instead, it acts as an interpreter. That is, it repeatedly:

This process stops when the end of the input channel is reached.

The syntax of sentences is as follows:

| sentence | ::= | [ lid : ] uid … uid \n |

Less formally, a sentence is a sequence of zero or more terminal symbols (uid’s), separated with whitespace, terminated with a newline character, and optionally preceded with a non-terminal start symbol (lid). This non-terminal symbol can be omitted if, and only if, the grammar only has one start symbol.

For instance, here are four valid sentences for the grammar of arithmetic expressions found in the directory demos/calc:

main: INT PLUS INT EOL INT PLUS INT INT PLUS PLUS INT EOL INT PLUS PLUS

In the first sentence, the start symbol main was explicitly specified. In the other sentences, it was omitted, which is permitted, because this grammar has no start symbol other than main. The first sentence is a stream of four terminal symbols, namely INT, PLUS, INT, and EOL. These terminal symbols must be provided under their symbolic names. Writing, say, “12+32\n” instead of INT PLUS INT EOL is not permitted. Menhir would not be able to make sense of such a concrete notation, since it does not have a lexer for it.

As soon as Menhir is able to read a complete sentence off the standard input channel (that is, as soon as it finds the newline character that ends the sentence), it parses the sentence according to whichever grammar was specified on the command line, and displays an outcome.

An outcome is one of the following:

When --interpret-show-cst is set, each ACCEPT outcome is followed with a concrete syntax tree. A concrete syntax tree is either a leaf or a node. A leaf is either a terminal symbol or error. A node is annotated with a non-terminal symbol, and carries a sequence of immediate descendants that correspond to a valid expansion of this non-terminal symbol. Menhir’s notation for concrete syntax trees is as follows:

| cst | ::= | uid |

| error | ||

| [ lid : cst … cst ] |

For instance, if one wished to parse the example sentences of §8.1 using the grammar of arithmetic expressions in demos/calc, one could invoke Menhir as follows:

$ menhir --interpret --interpret-show-cst demos/calc/parser.mly main: INT PLUS INT EOL ACCEPT [main: [expr: [expr: INT] PLUS [expr: INT]] EOL] INT PLUS INT OVERSHOOT INT PLUS PLUS INT EOL REJECT INT PLUS PLUS REJECT

(Here, Menhir’s input—the sentences provided by the user on the standard input channel— is shown intermixed with Menhir’s output—the outcomes printed by Menhir on the standard output channel.) The first sentence is valid, and accepted; a concrete syntax tree is displayed. The second sentence is incomplete, because the grammar specifies that a valid expansion of main ends with the terminal symbol EOL; hence, the outcome is OVERSHOOT. The third sentence is invalid, because of the repeated occurrence of the terminal symbol PLUS; the outcome is REJECT. The fourth sentence, a prefix of the third one, is rejected for the same reason.

Using Menhir as an interpreter offers an easy way of debugging your grammar. For instance, if one wished to check that addition is considered left-associative, as requested by the %left directive found in the file demos/calc/parser.mly, one could submit the following sentence:

$ ./menhir --interpret --interpret-show-cst ../demos/calc/parser.mly INT PLUS INT PLUS INT EOL ACCEPT [main: [expr: [expr: [expr: INT] PLUS [expr: INT]] PLUS [expr: INT]] EOL ]