THE SEMIOTICS OF THE WEB*

Philippe Codognet

INRIA-Rocquencourt

B.P. 105, 78153 Le Chesnay Cedex, FRANCE

and

Université Paris 6

LIP6, case 169

4, Place Jussieu, 75005 PARIS, France

Philippe.Codognet@lip6.fr

Before being seduced by Zeus in the form of a serpent, and conceiving Dionysos by him, Persephone, left by Demeter in the cave of Cyane, had begun a weaving on which the entire universe would be depicted.

I am not sure that the web woven by Persephone in this Orphic tale, quoted as the epigraph of Michel Serres’ La communication, is what we have come to call the World Wide Web. Our computer web on the internet is nevertheless akin to Persephone’s in its aim: representing and covering the entire universe. Our learned ignorance is conceiving an infinite virtual world whose center is everywhere and whose circumference is nowhere...

I. Introduction

We will try in this paper to develop a semiotic analysis of computer-based communication, especially human-to-human communication through an electronic medium. Semiotics is a powerful tool for rephrasing information theory and computer science and for shedding new light on this global phenomenon. Alongside semiotics, we will nevertheless also base our study on a more classical historical analysis, sometimes verging on historicism, to point out the deep roots of contemporary computer communication. We will therefore retrace the history of the ‘universal language of computers’, that is, binary notation, and link it to that of the ‘universal language of images’, a long tradition in the history of ideas going back to Cicero’s Art of Memory and various Renaissance curiosities.

Computers are artefacts designed to store and manipulate information (information being basically anything that can be algorithmically generated), encoded in various ways. Information theory can be thought of as a kind of simplified or idealized semiotics: a ciphering/deciphering algorithm represents the interpretation process used to decode some signifier (encoded information) into some computable signified (meaningful information) to be fed to a subsequent processing step. Since each decoded output can in turn become the input of a further decoding, semiosis is, of course, unlimited.

Communication between computers follows the same scheme. As data have to be transmitted through some external (usually analog) medium, a further encoding scheme (semiotic system) has to be devised and applied: the communication protocol. The current success of the World Wide Web protocol on the internet (HTTP) is mainly due to its ability to handle images and sound in addition to plain alphanumeric text. As humans communicate through this medium and exchange cultural signs, some problematic issues must be raised. Indeed, the human being has to decompose himself into a collection of transmissible and immediately understandable signs in order to be communicable, and this drift can be seen today in personal Web pages and electronic mail. The self is mutilated and disintegrated into conventional signs, in a deeper and much more dramatic way than in oral communication. The success of the Web goes hand in hand with its semantic poverty, and the Web is heading toward some "zero degree" of communication. The Entropy Principle warns us that we are converging towards a stable state where everyone will be connected and fully informed of a homogeneous and therefore null content.
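The idea that a protocol is one more layer of conventional signs wrapped around the message can be sketched in a few lines. The example below assumes a deliberately simplified, HTTP-like format (a status line, two headers, a blank line, then the body). The function names `wrap` and `unwrap` are hypothetical, and this is an illustration rather than a conforming HTTP implementation.

```python
def wrap(body: str) -> bytes:
    """Encode text as UTF-8, then frame it in a simplified HTTP-like response."""
    payload = body.encode("utf-8")  # first layer: characters -> bytes
    header = (
        "HTTP/1.0 200 OK\r\n"
        "Content-Type: text/plain; charset=utf-8\r\n"
        f"Content-Length: {len(payload)}\r\n"
        "\r\n"  # an empty line separates the headers from the body
    )
    return header.encode("ascii") + payload  # second layer: the protocol frame


def unwrap(message: bytes) -> str:
    """Interpret the frame: read the headers, then decode exactly the announced body."""
    head, _, payload = message.partition(b"\r\n\r\n")
    headers = {}
    for line in head.decode("ascii").split("\r\n")[1:]:  # skip the status line
        name, _, value = line.partition(":")
        headers[name.strip().lower()] = value.strip()
    length = int(headers["content-length"])
    return payload[:length].decode("utf-8")


frame = wrap("Perséphone")
print(frame.split(b"\r\n")[2])  # b'Content-Length: 11'
print(unwrap(frame))            # Perséphone
```

Each layer needs an interpreter that knows its convention: the receiver must first read the protocol's own signs (the headers) before it can reach the bytes of the message, and only then the characters.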

II. The Universal Language of Machines

The success of the computer as a universal information-processing machine lies essentially in the fact that there exists a universal language in which many different kinds of information can be encoded, and that this language can be mechanized. This would realize Leibniz’s well-known dream of a universal language that would be both a lingua characteristica, allowing the "perfect" description of knowledge by exhibiting the "real characters" of concepts and things, and a calculus ratiocinator, making possible the mechanization of reasoning. If such a language were employed, Leibniz said, errors in reasoning would be avoided, and endless philosophical discussions would cease at once: the philosophers would simply sit around a table and say "Calculemus". This would indeed reify Thomas Hobbes’s motto: "cogitatio est computatio".

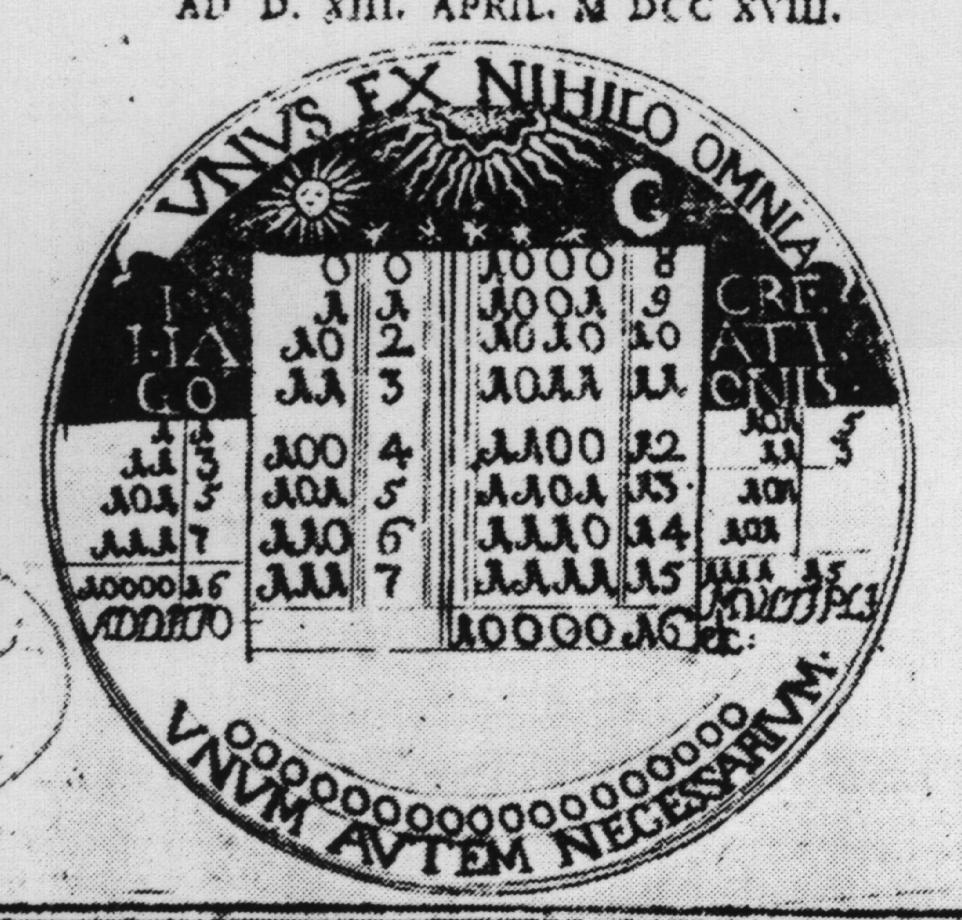

Leibniz’s medallion for the Duke of Brunswick

from the Postdoctoral Thesis by Johann Bernard Wiedeburg of Jena (1718)

Surprisingly enough (or maybe not), Leibniz is also commonly credited with the invention of the universal language of computers: binary notation. It seems, however, that binary notation was first used around 1600 by the English astronomer Thomas Harriot, famous for describing the "strange spotednesse of the moon" while being unable to interpret it as mountains and seas. A few months later, Galileo was the first to actually "see" the relief of the moon, in all likelihood because of his training in the fine arts (the geometry of cast shadows and chiaroscuro), making this event a landmark in the mutual influence of Art and Science.

Returning to binary notation, Leibniz himself found a predecessor in Abdallah Beidhawy, an Arab scholar of the thirteenth century. A few other authors also proposed binary notations during the seventeenth century, but it was not until its "discovery" and publication by Leibniz in 1703 that interest in non-decimal number systems began to grow. Leibniz’s invention can be traced back to 1697, in a letter to the Duke of Brunswick detailing the design of a medallion (see figure), but he delayed its publication until he found an interesting application. The one he chose was the explanation of the Fu-Hi figures, the hexagrams of the I-Ching, or Book of Changes, from ancient China, which had been communicated to him in 1700 by Father Bouvet, a Jesuit missionary in China. Two and a half centuries later, binary notation found another application with a much broader impact: digital computers. Although the first computer, the ENIAC machine created in 1946, used a notation that was a sort of hybrid between decimal and binary, full binary notation became general in the following years, after the Burks-Goldstine-von Neumann report of 1947:

"An additional point that deserves emphasis is this: An important part of the machine is not arithmetical, but logical in nature. Now logics, being a yes-no system, is fundamentally binary. Therefore, a binary arrangement of the arithmetical organs contributes very significantly towards a more homogeneous machine, which can be better integrated and is more efficient."

This report defined the so-called IAS computer design, which formed the basis of most of the systems of the early fifties, among the first purely binary machines: IBM 701 (1952), ILLIAC (University of Illinois, 1952), MANIAC (Los Alamos Scientific Laboratory, 1952), AVIDAC (Argonne National Laboratory, 1953), BESK (Sweden, 1953), BESM (Moscow, 1955), WEIZAC (Israel, 1955), DASK (Denmark, 1957), etc.
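Leibniz’s explanation of the Fu-Hi figures, mentioned above, rests on reading each of the six lines of a hexagram as one binary digit, so that the 64 hexagrams correspond to the numbers 0 to 63. The sketch below assumes particular conventions for illustration only: an unbroken (yang) line counts as 1, a broken (yin) line as 0, and the lines are listed from top to bottom with the first as the most significant digit. The names `hexagram_to_int` and `int_to_hexagram` are hypothetical.

```python
SOLID, BROKEN = "yang", "yin"  # unbroken and broken lines (assumed labels)


def hexagram_to_int(lines: list) -> int:
    """Read six lines (listed top to bottom) as a 6-digit binary number."""
    if len(lines) != 6:
        raise ValueError("a hexagram has exactly six lines")
    value = 0
    for line in lines:
        if line not in (SOLID, BROKEN):
            raise ValueError(f"unknown line type: {line!r}")
        value = value * 2 + (1 if line == SOLID else 0)  # shift left, add the new digit
    return value


def int_to_hexagram(n: int) -> list:
    """Write n (0..63) as six binary digits, then map each digit to a line."""
    if not 0 <= n < 64:
        raise ValueError("only 64 hexagrams exist")
    return [SOLID if digit == "1" else BROKEN for digit in format(n, "06b")]


print(hexagram_to_int([BROKEN] * 6))  # 0  (all lines broken)
print(hexagram_to_int([SOLID] * 6))   # 63 (all lines unbroken)
print(int_to_hexagram(5))             # ['yin', 'yin', 'yin', 'yang', 'yin', 'yang']
```

Because the two functions are inverses, each number from 0 to 63 maps to a distinct hexagram and back, which is the kind of one-to-one correspondence between the Chinese figures and dyadic arithmetic that Leibniz’s explanation relies on.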

Computers can compute, for sure, and using binary notation to represent numbers is certainly of great interest, but another key issue remains in making them able to process higher-level information. The first step was to encode alphabetical symbols, thereby moving from the realm of numbers to the realm of words. The first binary encoding of alphanumeric characters was designed nearly a century ago by Giuseppe Peano, the very Peano responsible for the first axiomatization of arithmetic. He designed an abstract stenographic machine based on a binary encoding of all the syllables of the Italian language. Along with the phonemes, coded with 16 bits (thus allowing 65,536 combinations), there was an encoding of the 25 letters of the (Italian) alphabet and the 10 digits. Peano’s code, perhaps too technologically advanced for its time or simply too exotic, passed unnoticed and was long forgotten. Nowadays, computers use the ASCII encoding of letters and numbers, which represents each character with 7 bits (or 8 for extended ASCII, which includes accented letters). Able to handle both numbers and letters, the computer soon became the perfect data-processing machine, the flawless artifact of the information technology age.
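The counts quoted above follow from simple powers of two, and the 7-bit ASCII code can be shown directly. This sketch uses Python’s built-in ASCII codec; the helper names are hypothetical, and ISO 8859-1 (Latin-1) is used only as one example of an 8-bit extension, since "extended ASCII" covers several different 8-bit character sets.

```python
print(2 ** 16)         # 65536 combinations for Peano's 16-bit syllable code
print(2 ** 7, 2 ** 8)  # 128 256: codes available with 7 and with 8 bits


def to_ascii_bits(text: str) -> list:
    """Encode each character as its 7-bit ASCII code (fails on non-ASCII characters)."""
    return [format(code, "07b") for code in text.encode("ascii")]


def from_ascii_bits(codes: list) -> str:
    """Decode a list of 7-bit strings back into text."""
    return bytes(int(code, 2) for code in codes).decode("ascii")


bits = to_ascii_bits("Peano")
print(bits)                   # ['1010000', '1100101', '1100001', '1101110', '1101111']
print(from_ascii_bits(bits))  # Peano

# An accented letter has no 7-bit ASCII code; in Latin-1 it needs the 8th bit.
print(format("é".encode("latin-1")[0], "08b"))  # 11101001
```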

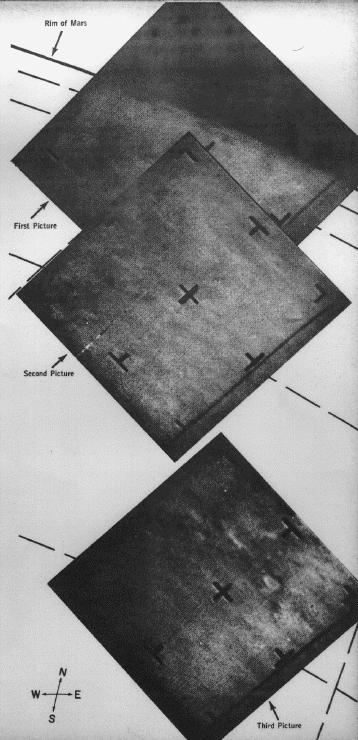

Another landmark, however, is crucial: the digitization and, of course, binarization of pictures, which opened the realm of images to the computer. To the best of my knowledge, this technology was first revealed to the general public in the mid-1960s, during the heyday of space exploration. Time magazine, reporting on the Mariner IV mission to Mars (whose TV camera transmitted back the first pictures ever taken of the surface of the red planet), wrote:

"Each picture was made up of 200 lines – compared with 525 lines of commercial TV screens. And each line was made up of 200 dots. The pictures were held on the tube for 25 seconds while they were scanned by an electron beam that responded to the light intensity of each dot. This was translated into numerical code with shadings running from zero for white to 63 for deepest black. The dot numbers were recorded in binary code of ones and zeros, the language of computers. Thus white (0) was 000000, black (63) showed up as 111111. Each picture – actually 40,000 tiny dots encoded in 240,000 bits of binary code – was stored on magnetic tape for transmission to the Earth after Mariner had passed Mars. More complex in some respects than the direct transmission of video data that brought pictures back from the moon, the computer code was necessary to get information accurately all the way back from Mars to Earth."

Mariner IV pictures from Mars, Time Magazine 86, July 23, 1965.

Print-out of Mariner IV pictures as binary numbers

Time Magazine 86, July 23, 1965.

For comparison, modern computers may handle images of a million pixels or more (the "small dots") in millions of colors, requiring at least 100 times as many bits of binary code.
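The figures in the Time account, and the comparison just made, can be checked with a short sketch. The 200 × 200 grid and the 6-bit shades come from the quoted article; the "modern" image (one million pixels at 24 bits per pixel, about 16.7 million colors) is an assumption chosen only to illustrate the order of magnitude, and the function names are hypothetical.

```python
WIDTH = HEIGHT = 200  # 200 lines of 200 dots, per the Time account
BITS_PER_DOT = 6      # shades 0 (white) to 63 (deepest black) need 6 bits


def encode_dot(shade: int) -> str:
    """Encode one dot's shade as a 6-digit binary string."""
    if not 0 <= shade <= 63:
        raise ValueError("shade must be between 0 and 63")
    return format(shade, "06b")


def encode_picture(picture: list) -> str:
    """Concatenate the codes of all dots, line by line."""
    return "".join(encode_dot(shade) for row in picture for shade in row)


print(encode_dot(0), encode_dot(63))  # 000000 111111

blank = [[0] * WIDTH for _ in range(HEIGHT)]  # a uniformly white test picture
mariner_bits = len(encode_picture(blank))
print(WIDTH * HEIGHT, mariner_bits)  # 40000 240000

# Assumed modern figures: one million pixels, 24 bits per pixel (2**24 colors).
modern_bits = 1_000_000 * 24
print(2 ** 24, modern_bits // mariner_bits)  # 16777216 100
```

With more pixels or deeper color, the ratio only grows, which is why the factor of 100 is a lower bound rather than an exact figure.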

Indeed, this ability to manipulate pictorial information proved to be the main reason for the current explosion of cyberspace and the internet. Without images, with human-to-computer and human-to-human (through computer) interactions limited to the alphanumeric set, electronic communication was confined to computer professionals and a few crucial business and military applications. Let us not forget that the ancestor of the Internet was the military-funded ARPANET... The widening of the network to mainstream society, with the exponential growth and media coverage of the World Wide Web, could only happen if electronically exchanged signs became at the same time more complex, to hold more information more concisely, and less dry, to be more aesthetically pleasing. Let us now rewind history a little and look back at the tradition of using pictorial knowledge in science and philosophy.

III. The Power of Images

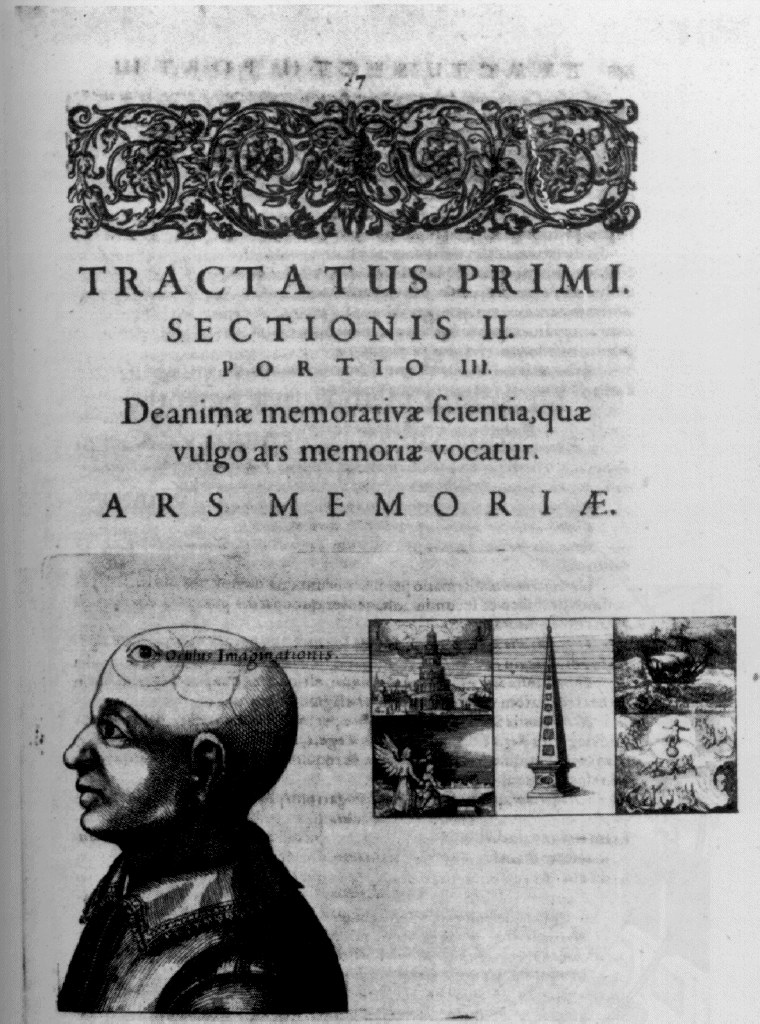

The use of images to represent knowledge and synthesize information has a long history in Western thought, particularly in the ancient tradition of the Art of Memory, a strand of classical studies going back to Cicero and persisting until the late Renaissance. This discipline was concerned with mnemonics and the ability to memorize anything at will, at a time when paper and other writing materials were rare and rhetoric was a key discipline. Leibniz himself, definitely the Ariadne’s thread (filum Ariadnae) of our study, considered that scholarship, or "perfect knowledge of the principle of all sciences and the art of applying them", could be divided into three equally important parts: the art of reasoning (logic), the art of inventing (combinatorics) and the art of memory (mnemonics). He even wrote an unpublished manuscript on the ars memoriae. The main idea of the ars memorativa is to organize one’s memory into "places" arranged in an imaginary architecture, e.g. the rooms of a house. This basic architecture must be well known and familiar, so that one can wander easily within it. Then, to remember particular sequences of things, one populates these rooms with "images" that refer directly or indirectly to what has to be remembered.

Abbey memory system

from Johannes Rombech, Congestorius artificiosae memoriae (1520)

The main assumption here, whose roots obviously go back to Plato, is that (visual) images are easier to remember than words. With its emphasis on the power of images, this tradition, especially in its later incarnations such as Giordano Bruno or Leibniz, would therefore naturally lead to the notion of a perfect language based on images instead of words, as images "speak more directly to the soul".

Robert Fludd, Ars Memoriae (1612)

It is interesting to note that this Platonic belief in the immediacy of pictures (as abstractions of ideas) has persisted up to our times, as shown by Saussure’s use of the drawing of a tree to illustrate the signified of the word ‘tree’ in the Cours de linguistique générale...

Tommaso Campanella, a contemporary of Giordano Bruno who had similar (if less definitive) problems with the Inquisition, imagined in his famous book The City of the Sun a utopian ideal city enclosed by seven concentric walls painted with images that would constitute an encyclopedia of all sciences, to be learned "very easily" by children from the age of ten.

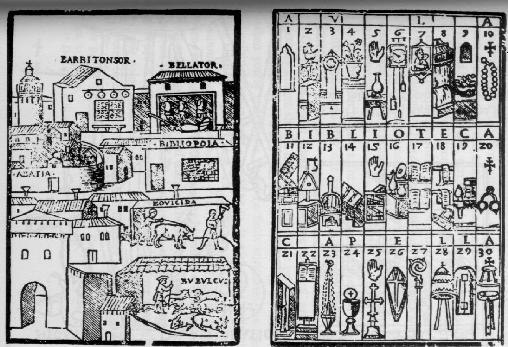

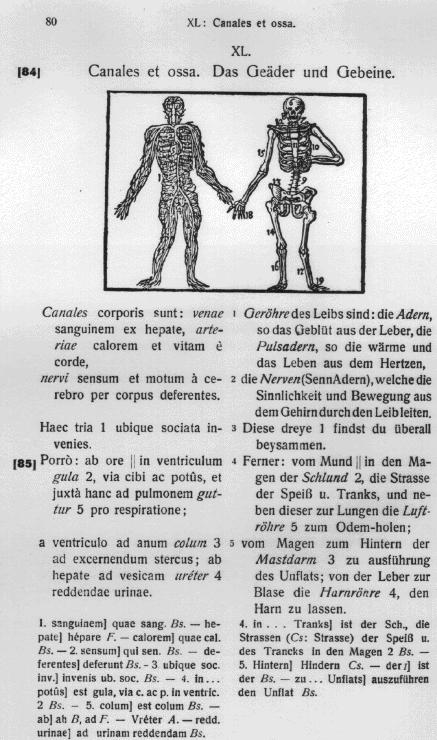

A few decades later, the Czech humanist Comenius (Jan Amos Komensky) implemented this dream in his Orbis sensualium pictus quadrilinguis (1658), "the painting and nomenclature of all the main things in the world and the main actions in life", actually a pictorial dictionary. Images are "the icons of all visible things in the world, to which, by appropriate means, one could also reduce invisible things". The philosophical alphabet of his global encyclopedia is an alphabet of images.

Comenius, Orbis sensualium pictus quadrilinguis (1658)

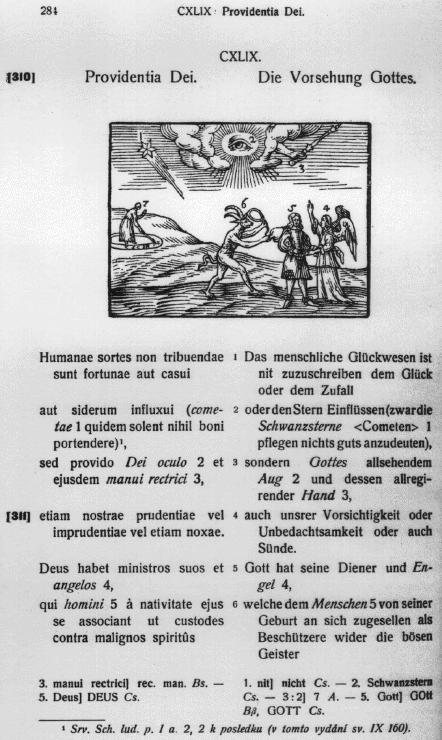

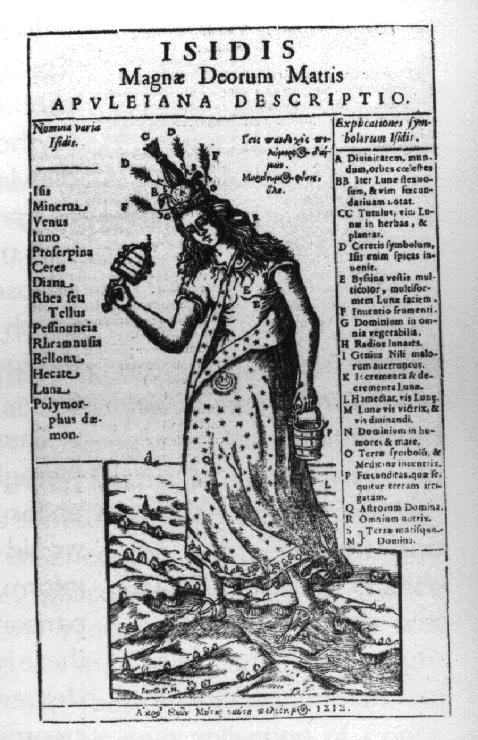

A particularly interesting device used by Comenius is the attachment of letters or numbers to parts of the image, which are then referred to in the text. He thus had recourse to indexical signs to make the image work as a global pictorial diagram. At the same time, the Jesuit Father Athanasius Kircher was using the same indexical device in his famous Oedipus Aegyptiacus (1652) and in several others of his numerous writings.

Athanasius Kircher, Oedipus Aegyptiacus (1652)


Athanasius Kircher, Musurgia universalis (1650)

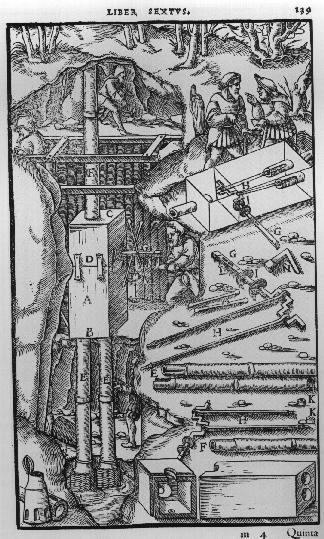

This use of letters or numbers to decompose a picture and refer to a more detailed explanation can, however, be traced back a century earlier. Indeed, the production of illustrated printed books "that would be called coffee-table books if they were published today" developed rapidly after 1520. An important part of such publications were technical books on various subjects such as architecture, metallurgy, hydraulics and mechanics. The last quarter of the sixteenth century saw the printing of many "theaters of machines" describing various artifacts with, in addition to the now-classical drawing conventions established by the engineers of the Renaissance, this ingenious device of labeling parts and detailing them separately. See for instance the woodcut from Georg Bauer Agricola’s De re metallica (1556).

Georg Bauer Agricola, De re metallica (1556)

The earliest example I have found comes from an edition of Vitruvius’ Ten Books on Architecture dated 1521.

Cesare Cesariano’s edition of Vitruvius, Ten Books on Architecture (1521)

At the end of the sixteenth century, this indexed split-view technique was used by the Jesuits in various ways, for example in the paintings of martyrdom executed by Niccolò Circignani in several Jesuit churches in 1582-1583, such as the frescoes in the church of San Stefano Rotondo in Rome (1583).

Niccolò Circignani, Scene of Martyrdom, Rome, S. Stefano Rotondo

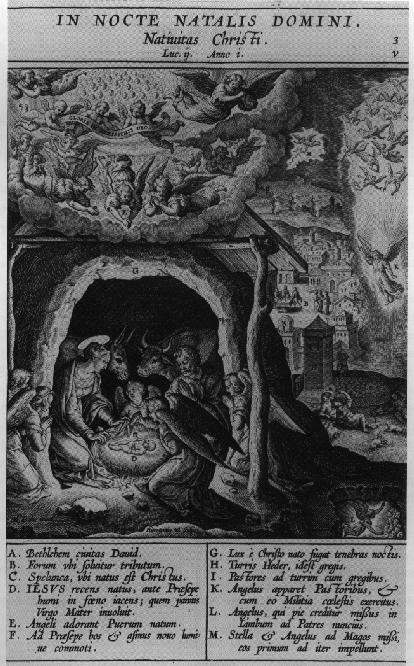

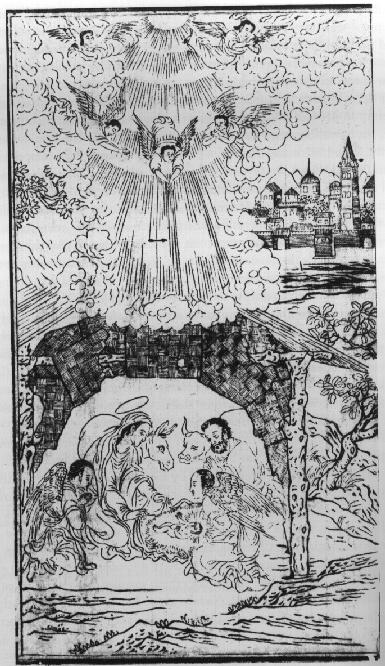

More importantly, this technique was used in Jerome Nadal’s Evangelicae historiae imagines (1593), a book for meditation and prayer consisting of 123 illustrations with "letters of the alphabet placed throughout the scene correspond[ing] to lettered captions of explanation underneath". This book was heavily used by the Jesuit missionaries in China, and Chinese copies of it were made, with the illustrations copied by local artists. It seems that for the Jesuits pictures were the best "universal language", and Nadal’s famous book indeed seems to have merged the medieval tradition of illustrated meditation books, such as Pseudo-Bonaventura’s Meditationes vitae Christi (late fourteenth century), with the drawing conventions of Renaissance illustrations in technical books.

Maerten de Vos, Nativity, engraved for

Hieronymus Nadal’s Evangelicae historiae imagines (1593)

Chinese woodcut copy of previous illustration in

João da Rocha’s Nien-zhu Gui-chen (1620)

Nearly identical to that of Comenius, the indexical device used in Nadal’s book corresponds to a primitive form of the indexical system found today on the WWW...

IV. A Web of Icons, Indexes and Symbols

It is therefore not surprising that when computers entered the realm of images, a new dimension was added to Cyberspace (literally, from 1D to 2D) and the term "Virtual Reality" began to be more than a daydream. We cannot investigate here the arguably profound impact of computers on image creation through computer graphics and virtual images. Rather, we will limit our study to the integration of pictures into electronic communication. Electronic mail, i.e. alphanumeric person-to-person communication on the internet, and "newsgroups", i.e. electronic dazibaos organized by field of interest, are rather old stories and would never by themselves have started the current media craze for the internet and the cyber-everything. The World Wide Web did so a couple of years ago, triggering some unconscious appeal for an electronic global world of pictures and images. Web pages are attractive and full of meaningful information, or so they seem. Surfing the Web is worth the hours spent waiting in front of the computer while data is transmitted from the other side of the planet, or spent wandering through useless information on uninteresting subjects. Our purpose here will only be to use semiotics to analyse the Web as a communication tool and determine which classical concepts are reified in it.

Let us go back to Peirce’s classical classification of signs as Icons, Indexes and Symbols, which is very useful for understanding the different ways in which signs operate and semiosis is performed. Let us take Arthur Burks’s presentation of this trichotomy:

"We can best do this in terms of the following examples: (1) the word ‘red’, as used in the English sentence, ‘the book is red’; (2) an act of pointing, used to call attention to some particular object, e.g. a tree; (3) a scale drawing, used to communicate to a machinist the structure of a piece of machinery. All these are signs in the general sense in which the term is used by Peirce: each satisfies his definition of a sign as something which represents or signifies an object to some interpretant. (...) A sign represents its object to its interpretant symbolically, indexically, or iconically according to whether it does so (1) by being associated with its object by a conventional rule used by the interpretant (as in the case of ‘red’); (2) by being in existential relation with its object (as in the case of the act of pointing); or (3) by exhibiting its object (as in the case of the diagram)."

Let us now try to use these notions to analyse the main features of Web pages. Web pages are so-called hypertexts, that is, texts some of whose components (words or sentences) are linked to other (hyper)texts, and so on and so forth. The reader can navigate through the whole text in a non-linear manner, by activating so-called hot links or anchor points that link one piece of text to another.

These links are an obvious example of indexes, with a word pointing to (referring to) its definition or to some related piece of information. The WWW merely extends the basic notion of hypertext by making it possible for an index to refer to a physically distant location on a remote computer somewhere else on the Internet, together, of course, with the ability to link to, and therefore communicate, images and sound. However, in order to act as an index, a sign has to be recognized as such, i.e. the index has to exhibit itself as a reference. This is done in hypertext by marking the hot links in blue ink, to make the reader aware that he can jump to another piece of hypertext or image, thereby using a conventional symbol to "show" the index as such.
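The hot link as an index can be made concrete with Python’s standard `html.parser` module. In the markup, each `<a href=...>` element ties a piece of anchor text to the location it points at, while the blue, underlined rendering is a display convention that browsers apply by default. The sample page and the `example.org` address are invented for illustration, and `LinkCollector` is a hypothetical name.

```python
from html.parser import HTMLParser
from urllib.parse import urlparse


class LinkCollector(HTMLParser):
    """Collect (anchor text, target) pairs: each pair is a sign and what it points to."""

    def __init__(self):
        super().__init__()
        self.links = []     # list of (anchor text, href) tuples
        self._href = None   # target of the anchor currently open, if any
        self._text = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            self._href = dict(attrs).get("href")
            self._text = []

    def handle_data(self, data):
        if self._href is not None:  # only keep text that sits inside a link
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._href is not None:
            self.links.append(("".join(self._text).strip(), self._href))
            self._href = None


page = ('<p>See the <a href="https://example.org/memory.html">Art of Memory</a> '
        'and <a href="#peirce">Peirce</a>.</p>')
collector = LinkCollector()
collector.feed(page)
collector.close()

for text, href in collector.links:
    host = urlparse(href).netloc  # empty for a link within the same document
    print(text, "->", host or "same page")
# Art of Memory -> example.org
# Peirce -> same page
```

The first link points to a remote host and the second to a fragment of the same document; the WWW’s extension of hypertext amounts to allowing the first kind alongside the second.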

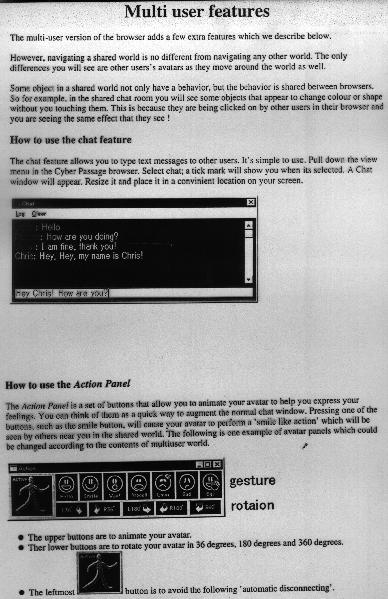

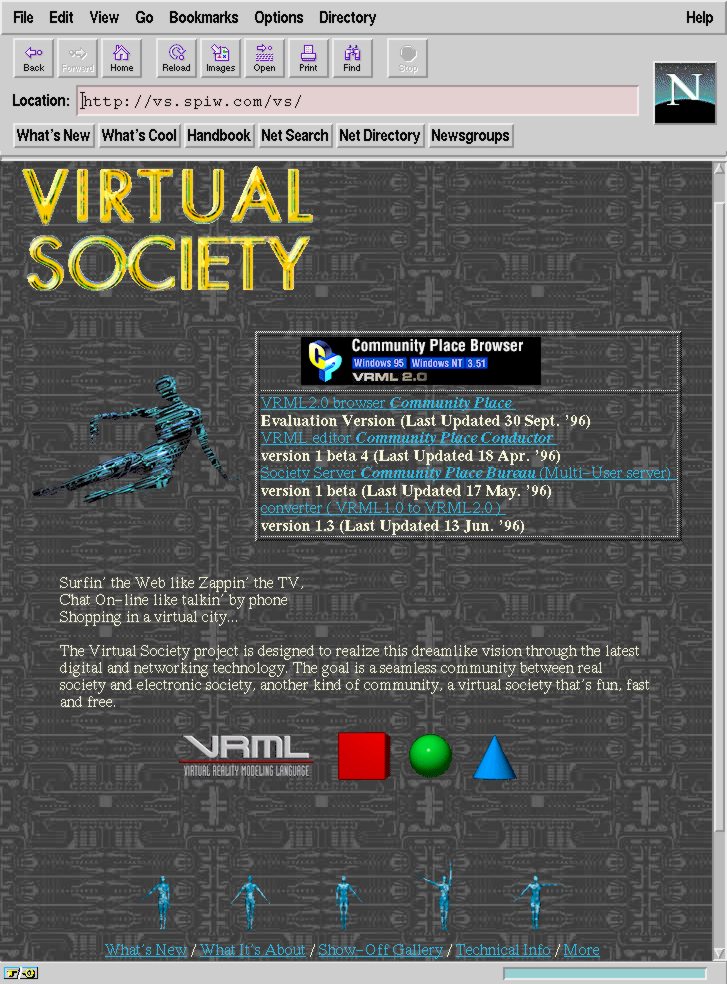

Web pages are usually full of small images that act as user-friendly and aesthetically appealing means of navigating through the network. These are symbolic signs, in the sense that their object must be conventionally established in order to help the reader orient himself in a homogeneous and unlimited cyberspace. In general, all pages at one Web site (a physical/logical place hosted by some institution) are homogenized to use the same symbols for basic moves in the hypertext documentation (usually at the top or bottom of the pages), so that the reader can quickly learn their conventional meaning. This can be seen in the example of the Sony Virtual Society home page. In this example, the images act as tautologies, duplicating the textual links below them, which actually give the pictures their meaning.

A web page from Sony's Virtual Society project

It could be interesting to relate the symbols used by the designers of Sony’s page to a much older picture from Johannes Rombech’s book on the Art of Memory, an image aimed at defining the optimal size of the locus to be used for a memory image: not too big and not too small, roughly a square of human size. Could this also be the meta-discourse behind Sony’s electronic navigation symbols?

Johannes Rombech, Congestorius artificiosae memoriae (1520)

Another example (again from Sony) will show that such symbols for hypertext links tend to become icons, as if this were their only means of escaping the textual tautology.